I’m working through Programming Computer Vision with Python: Tools and algorithms for analyzing images, which covers various methods for finding corresponding points of interest between two images. In the book, this eventually builds up to instructions on how to reconstruct a panorama.

The first technique for finding corresponding points of interest uses matrix algebra to look for corners (the Harris corner detector), describes the pattern around each corner, and then finds near matches between images. The corner detector works like this:

- Convert an image to grayscale

- For each pixel (or small region), take the derivative in the x and y dimensions

- Make a 2×1 vector of the dx and dy derivatives

- Multiply the vector by its transpose, giving a 2×2 matrix

- Smooth each entry of that matrix with a Gaussian filter, so that the result for a pixel is influenced by its neighbors

- Compute the eigenvalues for the matrix

- If both eigenvalues are large, there is a corner; if only one is large, there is an edge (if both are small, the region is flat)

This gives you a way to find corners and edges within an image, though it involves quite a bit of math. Thankfully the authors provide their source code on GitHub. The examples in the book are a good introduction to setting up these scenarios, but they don’t provide as much guidance as I’d like on choosing algorithms, so I’m working up samples using my own data.
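The steps listed above can be sketched in NumPy and SciPy roughly as follows. This is my own minimal version, not the book's code: it computes the two eigenvalues explicitly and combines them with the classic Harris measure λ1·λ2 - k(λ1 + λ2)², where `k = 0.04` is an assumed, commonly used constant and `sigma` plays a role similar to `wid` below. (As I read it, the book's `compute_harris_response` skips the eigenvalues and uses a determinant-over-trace ratio of the same matrix instead.)

```python
import numpy as np
from scipy import ndimage


def harris_response(im, sigma=3.0, k=0.04):
    """Sketch of a Harris-style corner response following the listed steps.

    im: 2-D grayscale array. sigma: scale of the Gaussian derivative and
    smoothing filters (assumed parameter). k: assumed Harris constant.
    Returns (response, lam1, lam2) with lam1 >= lam2 at every pixel.
    """
    im = np.asarray(im, dtype=float)
    # Derivatives in x (columns, axis 1) and y (rows, axis 0).
    ix = ndimage.gaussian_filter(im, sigma, order=(0, 1))
    iy = ndimage.gaussian_filter(im, sigma, order=(1, 0))
    # Entries of the outer product [ix, iy]^T [ix, iy], each smoothed with a
    # Gaussian so that neighboring pixels contribute.
    wxx = ndimage.gaussian_filter(ix * ix, sigma)
    wxy = ndimage.gaussian_filter(ix * iy, sigma)
    wyy = ndimage.gaussian_filter(iy * iy, sigma)
    # Closed-form eigenvalues of the symmetric matrix [[wxx, wxy], [wxy, wyy]].
    half_trace = (wxx + wyy) / 2.0
    root = np.sqrt(((wxx - wyy) / 2.0) ** 2 + wxy ** 2)
    lam1 = half_trace + root
    lam2 = half_trace - root
    # Large and positive only when both eigenvalues are large (a corner);
    # negative when just one is large (an edge); near zero in flat areas.
    response = lam1 * lam2 - k * (lam1 + lam2) ** 2
    return response, lam1, lam2
```

On a synthetic image of a bright square, the response comes out positive at a corner of the square, negative along the middle of an edge, and close to zero in flat areas.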

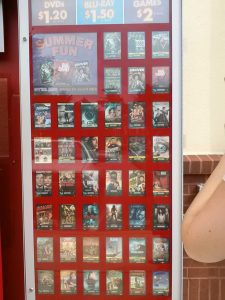

To test this technique, I tried finding matching points among three images. Two are cell phone pictures of a Redbox (a movie rental kiosk), and the third is a mask I made, to see whether the matches could help line it up over the phone images. There are better ways to do that, but part of the point is to test this algorithm and see what its failures look like.

I had hoped the algorithm would find matching points between the mask and the two phone images, but it found none at all. I suspect this is a problem of ambiguity: because it only looks at small, localized sections, it would be very difficult to tell which of the many similar corners a given movie cover’s edge corresponds to.

The code below shows how to orchestrate this process for the two phone images. There is only one tuning parameter, `wid`, which sets the size of the area used for corner detection (it is passed as the Gaussian scale, as the minimum distance between corners, and as the descriptor patch size).

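```python
# Assumes the book's PCV code from GitHub is installed; adjust the import
# if harris.py is simply on your path instead.
from numpy import array
from PIL import Image
from pylab import figure, gray, show
from PCV.localdescriptors.harris import (compute_harris_response, get_harris_points,
                                         get_descriptors, match_twosided, plot_matches)

# Raw strings keep Windows backslashes literal ("\r" would be a carriage return).
redbox1 = r"G:\images\movies\redbox1.jpg"
redbox2 = r"G:\images\movies\redbox2.jpg"

# Load both photos as grayscale arrays.
im1 = array(Image.open(redbox1).convert('L'))
im2 = array(Image.open(redbox2).convert('L'))

# The single tuning parameter: size of the area used around each corner.
wid = 10

# Detect corners, then extract a patch descriptor around each one.
harrisim1 = compute_harris_response(im1, wid)
filtered_coords1 = get_harris_points(harrisim1, wid + 1)
d1 = get_descriptors(im1, filtered_coords1, wid)

harrisim2 = compute_harris_response(im2, wid)
filtered_coords2 = get_harris_points(harrisim2, wid + 1)
d2 = get_descriptors(im2, filtered_coords2, wid)

print('starting matching')
# Keep only matches that agree in both directions (1 -> 2 and 2 -> 1).
matches = match_twosided(d1, d2)

# Draw the two images side by side with lines between matched points.
figure()
gray()
plot_matches(im1, im2, filtered_coords1, filtered_coords2, matches)
show()
```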

It also turns out that comparing two similar images of this complexity doesn’t work at all: the matching took hours to finish, and it paired up seemingly random points.
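Part of why matching took hours is probably that, as far as I can tell from the book's code, its matcher computes normalized cross-correlation (NCC) for every pair of descriptors inside nested Python loops. Here is a sketch of the same two-sided NCC idea vectorized with NumPy, so all pairs are scored with a single matrix product. The names `patch_descriptors`, `ncc_matrix` and `match_twosided_fast` are my own hypothetical helpers, not the book's API, and the 0.5 threshold is an assumed default. This only addresses speed; it won't make ambiguous matches any less random.

```python
import numpy as np


def patch_descriptors(im, coords, wid=5):
    """Flatten the (2*wid+1) x (2*wid+1) patch around each (row, col) point.

    Assumes every point is at least wid pixels from the image border, so
    all patches have the same size.
    """
    im = np.asarray(im, dtype=float)
    return np.array([im[r - wid:r + wid + 1, c - wid:c + wid + 1].ravel()
                     for r, c in coords])


def ncc_matrix(d1, d2):
    """NCC score between every descriptor in d1 and every one in d2."""
    def standardize(d):
        d = d - d.mean(axis=1, keepdims=True)
        std = d.std(axis=1, keepdims=True)
        # Flat (constant) patches become zero vectors instead of dividing by 0.
        return d / np.where(std > 0, std, 1.0)
    return standardize(d1) @ standardize(d2).T / d1.shape[1]


def match_twosided_fast(d1, d2, threshold=0.5):
    """For each row of d1, return the index of its match in d2, or -1.

    A pair is kept only if each descriptor is the other's best match and
    the NCC score exceeds threshold.
    """
    scores = ncc_matrix(d1, d2)
    best12 = scores.argmax(axis=1)  # best d2 index for each d1 row
    best21 = scores.argmax(axis=0)  # best d1 index for each d2 row
    idx = np.arange(len(d1))
    keep = (scores[idx, best12] > threshold) & (best21[best12] == idx)
    return np.where(keep, best12, -1)
```

The score matrix holds one entry per descriptor pair, so memory grows with the product of the two corner counts; with very many corners you would score `d1` in chunks.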

All is not lost, though. If we could extract the movie covers from the cell phone image, we could compare them to known images to identify them. As a second experiment, I cropped one cover from the cell phone picture and scaled it up to match a higher-resolution image of the actual cover. In the images below, the lines between the images again connect areas that are thought to match, based on corner descriptors. In each image, most of the lines are close to horizontal, which appears to be a pretty good test of accuracy (they are not exactly horizontal because the scaling and rotation are not exact).

The following images show the results when comparing areas of 5 × 5, 10 × 10, 20 × 20, 30 × 30, 40 × 40 and 50 × 50. The number of matches drops off quickly around 30 × 30, and each larger size also takes far less time to compute: the smallest sizes took around fifteen minutes on my machine, compared to about two seconds for the largest.
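One plausible reason the larger sizes run so much faster: in the code above, `wid + 1` is also passed as the minimum distance between corners, so larger values leave fewer corners, while two-sided matching scores every pair of descriptors, so the work grows roughly with the product of the two corner counts. The sketch below shows a greedy minimum-distance selection of that kind. `select_corners` is a hypothetical helper of mine, not the book's `get_harris_points`, though I believe the book's function follows a similar idea; the 0.1 relative threshold is an assumption.

```python
import numpy as np


def select_corners(response, min_dist=10, threshold=0.1):
    """Greedy corner selection (a simple form of non-maximum suppression).

    Keeps points whose response exceeds threshold * max(response), strongest
    first, skipping any point within min_dist rows/columns of one already
    kept, and ignoring a border of width min_dist.
    """
    response = np.asarray(response, dtype=float)
    h, w = response.shape
    rows, cols = np.nonzero(response > threshold * response.max())
    order = np.argsort(-response[rows, cols])  # strongest candidates first
    allowed = np.zeros((h, w), dtype=bool)
    allowed[min_dist:h - min_dist, min_dist:w - min_dist] = True
    kept = []
    for i in order:
        r, c = rows[i], cols[i]
        if allowed[r, c]:
            kept.append((int(r), int(c)))
            # Block a square neighborhood around the accepted corner.
            allowed[max(r - min_dist, 0):r + min_dist + 1,
                    max(c - min_dist, 0):c + min_dist + 1] = False
    return kept
```

With `n1` and `n2` corners kept in the two images, a two-sided matcher makes on the order of `n1 * n2` NCC comparisons, so even a modest drop in corners per image shrinks the matching time sharply.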

For comparison, I also tried covers that don’t match. The algorithm still finds what it claims are “matching” points, but the vast majority of the lines are far from horizontal. This suggests that one could test whether two images show the same movie simply by measuring how random the line angles are, which would be a valuable step forward.
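One way to put a number on the randomness of the angles is to measure what fraction of match lines are close to horizontal. This sketch assumes the two images are drawn side by side, as `plot_matches` does, so the second image's points are shifted right by the first image's width. `horizontal_fraction` and its 10-degree tolerance are my own hypothetical choices, and I haven't established what cutoff would actually separate matching covers from non-matching ones.

```python
import numpy as np


def horizontal_fraction(coords1, coords2, matches, width1, tol_deg=10.0):
    """Fraction of match lines within tol_deg degrees of horizontal.

    coords1, coords2: sequences of (row, col) points in each image.
    matches: for each point in coords1, an index into coords2, or -1.
    width1: width in pixels of the first image; the second image is drawn
    to its right, so its columns are offset by this amount.
    """
    angles = []
    for i, j in enumerate(matches):
        if j < 0:
            continue  # no match for this point
        (r1, c1), (r2, c2) = coords1[i], coords2[j]
        dx = (c2 + width1) - c1
        dy = r2 - r1
        # Angle of the line from horizontal, in [0, 90] degrees.
        angles.append(np.degrees(np.arctan2(abs(dy), abs(dx))))
    if not angles:
        return 0.0
    return float(np.mean(np.array(angles) <= tol_deg))
```

A matching cover should give a fraction near 1, while random matches spread over many angles and give a lower value.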

In the future I intend to look at other algorithms to improve the accuracy (e.g., SIFT, RANSAC), and I will work toward detecting whether a particular movie cover appears anywhere in the full cell phone image. You may also find my previous article of interest; it discusses how to find boundaries around sections of these images.
